Should you be scared of low performance?

What does it really mean?

If you are on the fence about developing program evaluations because you are scared of scoring ‘low’ in performance, consider the following:

- You may be pouring valuable resources (time, money, relationships, etc.) into failing programs that will never demonstrate the change you hope to see.

- Foundations and high-end donors will increasingly ask for these evaluations, and if you cannot demonstrate impact (beyond anecdotes and testimonials), you will lose funding.

If you have programs that are scoring ‘low’ in performance, it means:

- You have taken the important step of introducing program evaluations—you are further along than many others!

- You have set up outcome metrics that can help you get back on track.

- There are ‘lessons learned’ available in both the for-profit and nonprofit sectors that can help you make better programming decisions.

- You will have the support of foundations and high-end donors in getting your program redirected or closed.

Foundations and high-end donors

Foundations and high-end donors believe in the work you do and want to see you and your mission succeed. But they have limited resources to allocate and want to be sure they are investing their funds appropriately. High-end donors especially want to see their hard-earned money used well. Most of them are businesspeople who value metrics and evaluation in their own businesses. They are successful because they evaluate, make hard decisions, and course correct (cut products or programs, redesign, innovate, reallocate resources, etc.). They value the business pillars of transparency, comparability, and accountability, all of which you can easily introduce into your programs.

Unless they have ties to the programs you are running, few donors will stick around if you aren’t asking the right questions and growing your nonprofit. Even if they choose to stay, they may begin questioning your effectiveness. If it were your money, wouldn’t you do the same? So, why not build their trust and your credibility?

Why not ask the hard questions and course correct?

What can you do to course correct?

When your outcomes do not align with your target goals, examine the measurement process and the tools that produced those outcomes. The learning approaches offered, the delivery mechanisms, and other variables can help you determine where improvements should be made. This reflection ultimately brings you back to the design table.

You must keep an open mind and understand that changes may need to happen at any one of these levels – design, evaluation, or implementation.

First, take the results back to your stakeholders. This should not be a single group but many groups involved at various levels: board members, management, employees, and, probably most importantly, your beneficiaries. Because of the nature of the questions, and to elicit honest (and therefore the most useful) responses, it is generally best to bring in outside help for this step. A third party provides a safe space for discussion, so key stakeholders can give honest, thorough, anonymous feedback.

And yes, it is worth the cost to be sure that your data are reliable.

This process of reflection should include sharing the results and asking appropriately phrased questions to ‘drill down’ to the issue(s). Maybe it is an inappropriate fit of service or content. Maybe it is a misalignment between mission and demographic. Maybe it is a complete lack of understanding about what the beneficiaries need or want. Once the issue has been identified, ask your stakeholders (especially the beneficiaries) for their ideas on how things could be improved. Then it’s back to the design, implementation, and evaluation stages.

Be sure to communicate with your stakeholders (especially your beneficiaries and staff) and your funding sources (foundations and donors) to explain what you are doing and why.

Honest communication is critical to create trust and confidence that the program has the beneficiaries’ best interest in mind.

Lastly, don’t be scared to refocus and, if need be, trim your programs. It’s best to stay true to your mission and focus only on where you have a competitive advantage – what do you do well? What makes you different from other nonprofits working in the same field?

Go on, make hard decisions.

What do we do now?

- Map out your key stakeholders and beneficiaries.

- Enlist the help of a third party for your program reflection.

- Ask the hard questions through focus groups and, where necessary, interviews.

- Compile all of your information.

- Return to the program’s mission, goals, and outcomes: Is everything aligned?

- Review your performance targets: Are the standards adequate, or did you set the bar at the wrong height?

- Determine the kind of corrective actions that are needed for program improvement: Start with the easiest changes (the low-hanging fruit).

- Clearly lay out what is to be done, by whom, and by when.

- Schedule the next evaluation to assess whether these changes are producing the needed improvements.

- Define the implications and consequences of the plan on department policies, content, resource allocations, staffing, etc., and prioritize improvement actions based on high impact and low cost.

- Communicate:

- Celebrate and publicize your successes. Identify shortcomings; do not hide or minimize them. Instead, present how you intend to address these weaknesses and what you expect these improvements to achieve.

- Present results differently to different stakeholders; this includes both content and format.

- Provide reports that tell them what happened, why it happened that way, what the organization learned from it, and what the organization intends to improve in the future.

Remember, build trust by being transparent.

Sources

From “What Nonprofits Need to Learn From Business,” The Chronicle of Philanthropy, URL: https://philanthropy.com/article/Opinion-What-Nonprofits-Need/233892

From “Using the Evaluation Results,” University of Texas at El Paso, URL: https://academics.utep.edu/Portals/951/Step%206%20Using%20the%20Results%20in%20Evaluation.pdf

What do you need to consider about program evaluation?

This is Part 1 of the Program Evaluation Whitepaper series.

Why evaluate?

There are a number of reasons why evaluation should become routine practice in your nonprofit.

- Verifying that you are meeting your mission

- Increasing the impact of services or products for your beneficiaries

- Improving efficiency in delivery of services

- Identifying failing programs

- Determining which programs (or aspects of programs) are thriving

- Identifying programs that could be successfully replicated

- Documenting results that can be used to secure funding or share with donors

The Performance Imperative, a recently released framework for social-sector excellence, defines high performance as the ability to deliver, over a prolonged period of time, meaningful, measurable, and financially sustainable results for the people or causes the organization serves. According to the framework, high-performance organizations typically:

- Establish clear metrics that are tightly aligned with the results they want to achieve (both for programs and for the organization)

- Produce frequent reports on how well the organization is implementing its programs and strategies

- Make the collection, analysis, and use of data part of the organization’s DNA to ensure that data is a natural part of each staff member’s role

- Collect data that is relevant for determining how well they are achieving their desired results (they do not collect excessive information)

- Learn from other similar programs with related causes or populations

Good intentions, wishful thinking, and one-off testimonies don’t cut it. Social and public sectors are increasingly moving funds and resources towards evidence-based efforts. Regardless of size and budget, there are always incremental moves that nonprofits can make towards performance improvement.

The investment is worth it.

Are you ready to evaluate?

Building a data/evaluation-friendly culture takes time. Not everyone will be on board with a decision to move to evaluation – change is uncomfortable. Sometimes, it’s the board pushing managers to evaluate, and sometimes it is management appealing for board approval of funding for evaluations. Regardless, it’s usually a series of small successful steps at various levels that leads to larger, broader implementation.

Informing Change created a diagnostic tool that can help you determine where you are in terms of practical evaluation readiness. As stated on the site,

“This Evaluation Capacity Diagnostic Tool… captures information on organizational context and the evaluation experience of staff and can be used in various ways. For example, the tool can pinpoint particularly strong areas of capacity as well as areas for improvement, and can also calibrate changes over time in an organization’s evaluation capacity. In addition, this diagnostic can encourage staff to brainstorm about how their organization can enhance evaluation capacity by building on existing evaluation experience and skills. Finally, the tool can serve as a precursor to evaluation activities with an external evaluation consultant.”

It’s worth the time to walk through the tool to reveal any potential red flags, whether in your organizational context or in your staff’s skills. The presence of red flags does not mean you cannot move forward; identifying them helps you create a game plan for moving forward with the greatest success. Among other things, these flags help you assess where you need the most help now.

What kind of evaluation do you need?

Your program evaluation plans depend on what information you need to collect in order to make major decisions. For example, do you want to know more about what is actually going on in your programs, whether your programs are meeting their goals, the impact of your programs on recipients, etc.? Understanding your intended purpose allows you to build a more efficient plan, and will ultimately save you time and resources.

As a nonprofit, you will most likely be constrained by (or at least have to consider) the financial costs of conducting program evaluations. With this in mind, the broader your evaluation, the less in-depth it can be; if deeper information is more important, you will need to narrow the scope. Of course, it is possible to obtain both broad and specific information using a variety of methods; it will cost you more, but it is possible.

When considering program evaluations, there are two main kinds: formative and summative. Both are informative, but they approach evaluation differently.

- Formative evaluations use data to improve the program while it is being implemented.

- Summative evaluations consider the results or outcome of a program after its completion, the data of which can be used for future programming changes or new programs.

What do you want to know?

A logic model is an excellent preparation tool as it visually outlines how your organization does its work (the theory and assumptions behind the programs).

Questions must be crafted carefully and with great consideration for the purpose of the evaluation. They must also be reflective of the intended scope, be it narrow or broad.

To ensure that questions are appropriately tied to the program, it is good to refer to program objectives and goals, strategic plans, needs assessments, benchmark studies, and ultimately, the mission of your organization.

What does the process look like?

Regardless of whether you are taking on a formative or summative evaluation, the process is the same:

If you are thinking of implementing program evaluations across your organization, it is good to test your evaluation approach on one or two programs first in a pilot evaluation. In this way, you can determine what works best for your nonprofit culture. This also gives your team the time to grow in ownership and expertise of the entire process.

Who should lead this process?

Evaluations do not need to be highly complex to be worthwhile. For the most part, if you have staff and resources able to devote adequate time to the evaluation process, there is no need to hire a professionally trained individual or firm to carry it out. In fact, handling evaluations internally has an advantage: you begin to build and integrate new skills (organizational learning) by working through the process.

The only times it is critical to have an outside professional conduct the evaluation are when it is a stipulation of a grant or funding requirement, or when failing programs or personnel implications would be better handled by an objective third party (someone outside the organization).

That said, it is an excellent practice to have an expert review your plans and questions, so that you know your efforts will yield usable (valid, reliable, and credible) data. When possible, having an expert check in with you along the way to ensure your processes follow the methodology further ensures that your data can be trusted. By including expertise in the process, you can be confident in your findings when presenting them to your donors and board.

What do we do now?

- Before beginning, answer the following questions:

- What is our main (evaluation) question or purpose?

- Who is the main audience for this information?

- What decisions do we want to make as a result of this evaluation?

- What is our primary reason for conducting this evaluation?

- What resources (staff, expertise, budget, etc.) do we have available to carry out this evaluation?

- What data sources do we have available?

- When do we need these findings by?

- Will this information actually be used?

The last question is a highly important question for nonprofits. If there is no commitment to make decisions based on the findings, why go through the evaluation process?

- Build your logic model. Optimally, the logic model should be created before your program is implemented to ensure that all the elements in the program link to intended outcomes. But, if you haven’t created one, then creating one for a program evaluation is imperative.

Once you have done this, you will be able to select the type of evaluation most appropriate for your needs.

Sources

From ‘Program Evaluation Model 9-Step Process,’ Sage Solutions, URL: http://region11s4.lacoe.edu/attachments/article/34/(7)%209%20Step%20Evaluation%20Model%20Paper.pdf

From ‘Basic Guide to Program Evaluation (Including Outcomes Evaluation).’ Free Management Library, URL: http://managementhelp.org/evaluation/program-evaluation-guide.htm

From ‘The Performance Imperative: A framework for social-sector excellence,’ Leap of Reason Ambassadors Community, licensed under CC BY ND https://creativecommons.org/licenses/by-nd/4.0/

From ‘Logic Model Development Guide,’ W.K. Kellogg Foundation, URL: http://www.smartgivers.org/uploads/logicmodelguidepdf.pdf

From “Developing a Plan for Outcome Measurement: Chapter 3 Logic Models”, URL: http://www.strengtheningnonprofits.org/resources/e-learning/online/outcomemeasurement/default.aspx?chp=3

How do you develop an evaluation plan?

This is Part 3 of the Program Evaluation Whitepaper series. The previous papers covered what you should consider before starting a program evaluation and how to get started. Be sure to read those papers before diving into writing your plan.

What have you already determined?

For an evaluation, you should have done the following:

- Developed your outcome statements

- Mapped out your outcome progression sequence

- Identified your performance indicators (and targets if you have baseline information already)

- Discussed with your team and leadership which outcomes to pursue first, that is, your “need to knows” (we suggest starting by tracking only a few outcomes and adding others as you gain confidence)

So what now? The next step in the process is to get started writing the evaluation plan.

How do you get started?

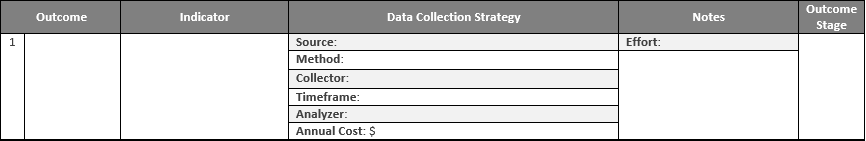

- Outcomes – the broader change you want to see

- Indicators – what you will use to measure

- Data collection strategy – how you will measure

- Notes – references to possible survey measures, plus a note on whether the data collection method will require considerable time and money to create the assessment tool, in addition to collecting and analyzing the information (you can rate this “low,” “medium,” or “high”)

- Outcome stage – short-, medium-, or long-term outcomes (refer to the logic model)

We propose something that looks like this:

At the end of this document, you will see an example of how all of this is put together.

What about data collection?

Aside from inputting the outcomes and indicators, answer the following questions in order to complete the plan:

- What is the source of the evaluation information?

- Program director, staff, program participants, etc.

- What methods will be used?

- Surveys, interviews, focus groups, observations, etc.

- Note: document review (records, reports, census data, health records, etc.) is closer to activities and outputs for implementation evaluation

- Who will collect the data?

- Program administrators, staff, external consultants, etc.

- When will the data be collected?

- Ongoing, quarterly, annually, etc.

- Who will summarize/analyze the information?

- Program administrators, staff, external consultants, etc.

- What is the annual cost for this evaluation?

- You may not know this upfront, especially if you are new to evaluations. It would be a good idea to track costs for a specified time in order to estimate costs going forward.

If you are new to research and evaluation, you may need to add accounting categories for research and development expenses. Add as much detail as possible (even down to geographic location) without making it too laborious to track. This detail lets you better understand the breakdown of costs, variances between projects, differences in costs between states or countries (if you are an international nonprofit), etc. Pivot tables can be your best friend. Suggested details include:

- Salary and benefits (your staff’s time)

- Purchased materials (cost of paper, licenses, etc.)

- Independent contractors (data collection or analysis experts, etc.)

- Equipment (tablets, phones, etc.)

- Travel (airfare, gas, mileage, rental cars, etc.) – consider organizing this information on separate lines

- Other details relevant to your nonprofit
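A pivot table simply totals amounts along two dimensions, such as project and cost category. As a hedged illustration of what that summary does, here is a minimal Python sketch; the project names, categories, and amounts are hypothetical, and in practice a spreadsheet pivot table does the same job.

```python
from collections import defaultdict

# Hypothetical expense records: each has a project, a cost category, and an amount.
# Category names mirror the list above; projects and amounts are made up.
expenses = [
    {"project": "Mentoring", "category": "Salary and benefits", "amount": 1200.0},
    {"project": "Mentoring", "category": "Travel", "amount": 300.0},
    {"project": "Tutoring", "category": "Salary and benefits", "amount": 800.0},
    {"project": "Tutoring", "category": "Purchased materials", "amount": 150.0},
    {"project": "Mentoring", "category": "Travel", "amount": 50.0},
]


def pivot_costs(records, row_key, col_key):
    """Sum amounts into a nested dict: table[row][col] -> total."""
    table = defaultdict(lambda: defaultdict(float))
    for rec in records:
        table[rec[row_key]][rec[col_key]] += rec["amount"]
    # Convert to plain dicts so the result prints cleanly.
    return {row: dict(cols) for row, cols in table.items()}


summary = pivot_costs(expenses, "project", "category")
for project, costs in summary.items():
    print(project, costs, "total:", sum(costs.values()))
```

The totals only come out cleanly if every record uses consistent project and category labels, which is why it pays to set up the account details before you start tracking.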

When considering surveys and quantitative data, always:

- Provide context for the participants. This includes:

- Who you are surveying

- Why you are surveying

- What you will use the information for

- How long the survey should take

- Whether the information collected will be kept confidential or anonymous, as well as who will have access to the data

- An expression of gratitude for their participation

- Consider the time it takes to take the survey; participants will be less inclined to honestly engage in a survey that takes longer than 10 minutes—be sure to time it!

- Include a healthy amount of demographic data. In doing so, you set yourself up for better data analysis. By collecting this basic demographic information, you will be able to determine for which categories of beneficiaries your program, procedures, and policies are working well and for which they are not.

- First Name, Last Name – if anonymous, use a code instead: DDMM (birthday) plus XXX (first three letters of last name)

- Age

- Gender

- Marital Status (if applicable)

- Education Level

- Race/Ethnicity

- Other elements that could be included: Salary Range, Employment Status, Family Size

- Consider how you will measure or chart growth: yes/no answers leave little room to show change, so it is better to write questions with scaled (Likert) responses

- Ask only what is necessary

- Test the survey beforehand to make sure questions are clear
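Two of the tips above work together: an anonymous code lets you match a participant’s pre- and post-program surveys, and a Likert item lets you express growth as a number. The sketch below is a minimal Python illustration under assumed conditions: the names, ratings, and the single 1–5 item are hypothetical, and averaging the change is only one simple way to summarize it.

```python
# Minimal sketch: match hypothetical pre- and post-program survey responses
# by anonymous code (DDMM birthday + first three letters of last name) and
# compute the average change on one 1-5 Likert item.


def anon_code(birth_day, birth_month, last_name):
    """Build a DDMM + XXX code, e.g. day 7, month 3, 'Garcia' -> '0703GAR'."""
    return f"{birth_day:02d}{birth_month:02d}{last_name[:3].upper()}"


# Hypothetical responses: code -> rating on a 1-5 scale.
pre = {
    anon_code(7, 3, "Garcia"): 2,
    anon_code(15, 11, "Nguyen"): 3,
    anon_code(1, 1, "Smith"): 4,
}
post = {
    anon_code(7, 3, "Garcia"): 4,
    anon_code(15, 11, "Nguyen"): 4,
    # Smith did not complete the post-survey, so is left out of the comparison.
}


def average_change(pre_scores, post_scores):
    """Average (post - pre) across participants who answered both surveys."""
    matched = pre_scores.keys() & post_scores.keys()
    if not matched:
        return None
    return sum(post_scores[c] - pre_scores[c] for c in matched) / len(matched)


print(average_change(pre, post))  # 1.5
```

Two participants can share a DDMM + XXX code (same birthday, same first three letters), so it is worth checking for duplicate codes before matching.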

If you are submitting your outcomes for any type of grant (foundation or federal), get some extra help.

This includes submitting the actual grant proposal as well. The stipulations for data collection and analysis are far more restrictive and demanding. You risk losing your funding or not being considered for the grant if you do not have a sound data collection and analysis plan in place.

Outcomes data rarely give you reasons as to why the outcomes have occurred.

Outcomes data merely identify what works well and what does not. This might be the reason many nonprofits do not engage in measuring outcomes. The question “What if what we are doing isn’t really making a difference?” is enough to throw any manager, CEO, or board into panic mode. But rather than fearing these results, nonprofit leaders should embrace them as a way of improving their programs. When you identify what is not working well, you can take a step back, redesign, and try again. This process of redesign should include qualitative research with various stakeholders (beneficiaries, administrators, providers, etc.) to pinpoint the breakdown in service or design. The “A-ha” moment is worth it.

What do you do now?

- Make sure your outcomes and indicators are clear and defined.

- Make sure you have only included “need to know” items, especially if you are new to this process.

- Brainstorm data collection methods for obtaining your data.

- Evaluate the time and energy required for these efforts.

- Put all of the information together into the Evaluation Plan.

- Review.

- Make a timeline. Be sure to include the necessary preparation time for any instrument development required. Timelines can get very complicated depending on how much information you wish to include.

- Review again.

- Start the evaluation process.

Evaluation Plan Outline for Data Collection (example)

Evaluation Plan for Data Collection (blank)

Sources

From “Evaluation Plan Workbook,” Innovation Network, URL: http://www.innonet.org/resources/eval-plan-workbook

From “Building a Common Outcome Framework to Measure Nonprofit Performance,” Urban Institute, URL: http://www.urban.org/sites/default/files/alfresco/publication-pdfs/411404-Building-a-Common-Outcome-Framework-To-Measure-Nonprofit-Performance.PDF

From “Candidate Outcome Indicators: Youth Mentoring Program,” Urban Institute and the Center for What Works, URL: http://www.urban.org/sites/default/files/youth_mentoring.pdf

How do you get started with your program evaluation?

This is Part 2 of the Program Evaluation Whitepaper series. The previous whitepaper covered what you should consider before starting a program evaluation. Please read that paper before continuing on.

What have you already determined?

For this evaluation, you should have determined the following:

- Main or over-arching question(s)

- Main audience

- The primary reason for conducting this evaluation

- The resources (staff, expertise, budget, etc.) available to carry out this evaluation

- The types of data sources available

- The timeline or due date

- The decisions to be made based on the results

- Most importantly, a commitment to use the information found

Going through the question-and-answer process, you have probably already determined whether you need an evaluation that informs your audience about the program as it happens (formative) or whether you need an evaluation that looks at the completed program (summative).

Couple this with the resources available to you, and you probably have a pretty good idea of your scope. The fewer the resources, the narrower and less involved the evaluation process will be.

After you have determined the scope of your evaluation, you can get started writing questions.

What is the big picture?

The tricky part about writing questions is keeping your questions tied to your evaluation purpose and not allowing yourself to get sidetracked with extraneous questions. Your over-arching question is never really answered by itself in an evaluation; it is answered by compiling the answers (evidence) from a number of sub-questions.

It is important to get your sub-questions (i.e., your evaluation questions) right.

How do you frame questions?

When thinking about your questions, reflect on them in terms of the considerations above, along with whether or not you are looking to evaluate process, impact, or outcomes. Process evaluations help you see how a program outcome or impact was achieved, while impact and outcome evaluations look at the effectiveness of the program in producing change. Within the body of research on evaluation, you will find conflicting definitions of impact and outcome measurement—mostly about which actually comes first. The bottom line is they both look at change that occurs on the beneficiary’s part. Within the nonprofit sector, it will mostly be referred to as outcome measurement (we will refer to outcomes in this whitepaper as well), and these measurements will focus on changes that come from program involvement. These changes are shifts in knowledge, attitudes, skills, and aspirations (KASA), as well as long-term behavioral changes.

Most funders are concerned with nonprofits reporting outcomes.

Funders want to know, “Are you making a difference?” Outcome data answer that question directly. Process questions may look like “What problems were encountered with the delivery of services?” or “What do clients consider to be the strengths of the program?” Outcome questions, by contrast, ask end-goal questions, such as “Did the program succeed in helping people change?” and “Was the program more successful with certain demographics than others?”

With the focus on actual change, outcomes questions should be asking things related to shifts in KASA that can be attributed to the participant’s involvement in the program. Furthermore, questions should be asking about things related to the ultimate goal of a particular program.

The chart below summarizes the types of evaluation questions. When discussing methods, quantitative refers to numeric information drawn from surveys, records, etc., and qualitative refers to more subjective, open responses that capture themes. A combination of the two is generally referred to as a ‘mixed methods’ approach.

| Evaluation Questions | What They Measure | Why They Are Useful | Methods |
|---|---|---|---|
| Process | How well the program is working; whether it is reaching the intended people | Tells how well the plans developed are working; identifies any problems in reaching the target population early in the process; allows adjustments to be made before the problems become severe | Quantitative, qualitative, mixed |
| Outcome | Immediate changes brought about by the program; changes in KASA (knowledge, attitudes, skills, and aspirations); changes in behaviors | Allows for program modification in terms of materials, resource shifting, etc.; tells whether programs are moving in the right direction; demonstrates whether (or the degree to which) the program achieved its goal | Quantitative, qualitative, mixed |

Refer to your logic model when writing your outcome evaluation questions. Because you should already have listed outcome types (short-term, intermediate, and long-term) and when they occur, your outcome questions should reflect the progression in the logic model. Outcome questions should be written in a way that reflects the change you want to see, the intended direction of change (increase, improve, decrease, etc.), and whom it is intended for. For example:

- Improved school attendance by youth (13–17 years)

- Improved academic achievement by youth (13–17 years)

When you are ready to start writing your questions, consider these criteria:

- Are the questions related to your core program?

- Is it within your control to influence them?

- Are they realistic and attainable?

- Are they achievable within funding and reporting periods?

- Are they written as change statements: Are they asking things that can increase, decrease, or stay the same?

- Are there any big gaps in the progression of impacts?

There are some really great sources out there that can help walk you through the process of outcome measurement. One of the best sources for developing this plan is Strengthening Nonprofits’ Measuring Outcomes (see sources for direct link to document). There is no need to reinvent the wheel when it comes to resources—we can just point you to the right sources and give you some key ideas to think about.

How do you measure change?

To actually measure change, you need criteria for data collection. Take the example above, improved school attendance: the statement tells us what we want to change, but not how it will be measured. Without a way to measure it, we cannot know whether we are actually making a difference; we only know the change we desire.

When referring to impact and outcomes, measurements come in the form of performance indicators. Indicators are measures that can be seen, heard, counted, or reported using some type of data collection method. To measure your performance, you need a baseline (starting point) and a target (goal). For each impact or outcome statement (evaluation question), you should establish baseline indicators and target indicators in order to effectively evaluate your performance.
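The framework above does not prescribe a formula, but one common way to express where an indicator stands between its baseline and its target is the share of the gap closed so far. The following is a minimal Python sketch of that idea; the numbers are hypothetical, loosely based on the school-attendance example.

```python
def progress_toward_target(baseline, current, target):
    """Share of the baseline-to-target gap closed so far (1.0 = target met).

    Works whether the desired direction is an increase or a decrease,
    because the gap is signed.
    """
    gap = target - baseline
    if gap == 0:
        raise ValueError("target equals baseline; progress is undefined")
    return (current - baseline) / gap


# Hypothetical attendance indicator: 80% baseline, 90% target, 85% now.
print(progress_toward_target(80, 85, 90))  # 0.5
# Hypothetical decrease goal: 20 absences at baseline, 10 target, 14 now.
print(progress_toward_target(20, 14, 10))  # 0.6
```

Because the gap is signed, the same calculation works for indicators you want to decrease, such as absences; values above 1.0 mean the target was exceeded.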

Considerations when writing your indicators:

- Are they specific?

- Are they measurable?

- Are they attainable?

- Are they realistic?

The great thing is that there are a number of groups who have already put together lists of indicators by sector. Be sure to check out Perform Well, The Urban Institute’s Outcomes Indicator Project, the Foundation Center’s TRASI, and United Way’s Toolfind.

What do you do now?

- Write your outcome statements: who, what, and how.

- Who will be impacted by the program?

- What will change as a result of the program?

- How will it change?

- Write your criteria (performance indicators) for measuring your outcomes.

- For each impact or outcome statement, you generally need 1–3 indicators (depending on complexity)

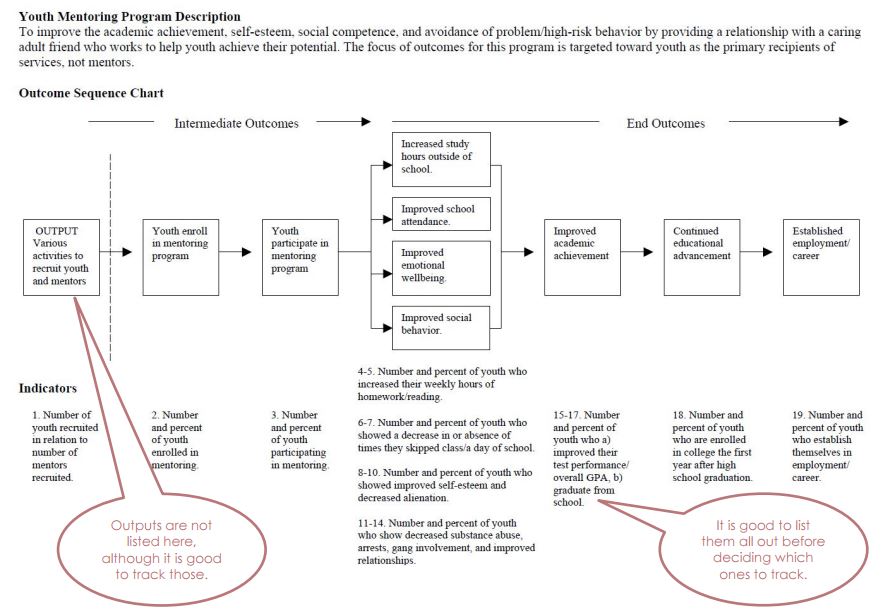

- Map them. Map out your envisioned outcomes sequence (again, referring to your logic model), along with your intended indicators.

- The example on the next page is taken directly from the Urban Institute’s Outcomes Indicator Project for a Youth Mentorship program.

Once you have done this, you will be able to select the type of evaluation plan appropriate for your needs.

Sources

From “A Framework to Link Evaluation Questions to Program Outcomes,” Journal of Extension, URL: http://www.joe.org/joe/2009june/tt2.php

From “Developing A Plan For Outcome Measurement,” Strengthening Nonprofits, URL: http://strengtheningnonprofits.org/resources/e-learning/online/outcomemeasurement/Print.aspx

From “Measuring outcomes,” Strengthening Nonprofits, URL: http://www.strengtheningnonprofits.org/resources/guidebooks/MeasuringOutcomes.pdf

From “Outcomes Indicators Project”, URL: http://www.urban.org/policy-centers/cross-center-initiatives/performance-management-measurement/projects/nonprofit-organizations/projects-focused-nonprofit-organizations/outcome-indicators-project

From “Candidate Outcome Indicators: Youth Mentoring Program,” Urban Institute and the Center for What Works, URL: http://www.urban.org/sites/default/files/youth_mentoring.pdf

Why should you think about needs assessments?


This is Part 1 of the Needs Assessment Whitepaper series.

What is a Needs Assessment?

A needs assessment is an active, systematic process of gathering accurate information that represents the needs and assets of a particular community or target group.

- A “need” is the gap between “what is” and “what should be”

- A “target group” is a specific group within a system for whom the system exists (ex. parents, students, etc.)

- An “asset” is something that can be used to improve the quality of life

- A “system” is a set of regularly interacting and interdependent elements that form a unified whole organized for a common purpose

Needs assessment findings are used to define the extent of the need within a community and the assets that are available to address the needs within that same community.
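Because a need is defined as the gap between “what is” and “what should be”, the basic arithmetic of a needs assessment can be sketched in a few lines of Python. This minimal example assumes each condition is measured on the same scale (here, a percentage of residents) and that higher values are better. The `Need` structure, the `rank_needs` function, and all of the figures are hypothetical. In practice, a committee would weigh gap size alongside other criteria, such as the assets already available, rather than rank on gap alone.

```python
from dataclasses import dataclass


@dataclass
class Need:
    """A community condition compared with a desired standard (hypothetical structure)."""
    area: str
    what_is: float         # current measured condition, as a percentage
    what_should_be: float  # desired condition, as a percentage

    @property
    def gap(self) -> float:
        # A "need" is the gap between "what should be" and "what is";
        # this assumes higher values are better.
        return self.what_should_be - self.what_is


def rank_needs(needs: list[Need]) -> list[Need]:
    """Drop conditions that already meet the standard, then sort by gap, largest first."""
    open_needs = [n for n in needs if n.gap > 0]
    return sorted(open_needs, key=lambda n: n.gap, reverse=True)


# Illustrative figures only, not real community data.
community = [
    Need("Adults with a high school diploma", what_is=72.0, what_should_be=90.0),
    Need("Households with internet access", what_is=85.0, what_should_be=95.0),
    Need("Children enrolled in preschool", what_is=40.0, what_should_be=70.0),
    Need("Residents within a mile of a food pantry", what_is=95.0, what_should_be=90.0),
]

for need in rank_needs(community):
    print(f"{need.area}: gap of {need.gap:.0f} percentage points")
```

Here the preschool gap (30 points) ranks first, followed by the diploma gap (18) and the internet gap (10). The food pantry condition already exceeds its standard, so it shows up as an asset rather than a need.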

Only by knowing these elements can organizations design the most effective, appropriate, and timely programs and services.

Needs assessments are usually conducted as part of a strategic planning process, when programs or services are being conceptualized, developed, or revamped. Characteristics of a needs assessment include:

- Starts with a situation analysis

- Focuses on the ends (outcomes) versus the means (process)

- Gathers data through established procedures and methods selected for specific purposes

- Identifies priorities and determines criteria for solutions

- Identifies essential resources within your organization

- Identifies essential resources your organization needs to acquire

- Determines how to use, develop, or acquire those resources

- Leads to actions that will improve programs, services, operations, and organizations

- Integrates qualitative (ex. focus groups and interviews) and quantitative (ex. surveys) methods

Needs assessments can be used in many different areas including:

- Education & Curriculum Development

- Public Health

- Training

- Community

Because this series is about nonprofit work, we concentrate on the community needs assessment. Needs assessments generally focus on one of three areas:

- Evaluating the strengths and weaknesses within a community and creating or improving services based on the identified weaknesses. This type of needs assessment is structured primarily around how best to obtain information, opinions, and input from the community and what to do with that information.

- Addressing a known problem or potential problem facing the community. It centers less on direct involvement with the community and more on the governing bodies, stakeholders, businesses, etc. that will potentially be affected.

- Improving efficiency and effectiveness within an organization (organization-focused).

Why are Needs Assessments important?

Communities and organizations, like living organisms, are in a continual state of change. Political, economic, and social variables bring shifts in the demographics (ex. age, ethnicity, unemployment rate, etc.) of each community. Programs and services that were created for a community or particular audience years ago may not be what is needed (or wanted) at present.

As a nonprofit, if your purpose is to serve the community, then…

You need to stay informed about the change that’s occurring within your community.

When your nonprofit takes an active role in understanding the community, the natural by-product may be exactly what it takes to get your programs and services going. By interacting with the communities you serve and using their input and feedback in your planning, you increase their understanding of their needs (their gaps), why those needs exist, and why the gaps must be addressed. When people are involved in the process from the ground up, they are more likely to use the programs and services being developed and less likely to resist change. Addressing their desires and concerns head-on can build a sense of ownership in the community, and understanding why services or programs are being offered empowers community members to use them.

Bottom line, needs assessments are used to guide decision-making by providing a justification for why your programs and services are needed.

Who is involved?

Needs assessments should involve multiple people in each step of the process. They can be conducted:

- By a single organization, or

- Through a collaboration of community partners (such as other nonprofits, community organizations, foundations, universities, or government entities)

There is always a needs assessment committee or management team that manages the needs assessment process; this team and its responsibilities are discussed in the next whitepaper, “How do you conduct a needs assessment?”

What does the process look like?

There are essentially three steps in conducting a needs assessment. The next whitepaper lays them out in detail; for now, consider the initial steps below.

What do you do now?

- Consider whether your organization is serious about program efficacy, or whether it will continue doing what it does because that is what it is known for or simply what the board directs. In some cases, your hands are tied.

- If you are serious about it, are your board, leadership, and management willing to commit the time and resources required to conduct a needs assessment?

- Similarly, consider whether your nonprofit will be flexible and adaptable enough to make the necessary changes once the needs assessment is done. If not, think about the steps you will need to take to prepare your organization for change. Change is never easy, but there may be things you can do to convince people or, better yet, demonstrate the need; for example, you might present case studies of similar nonprofits.

- Consider whether your organization will conduct the assessment alone or in partnership with others.

- Begin thinking about who from your organization would serve on the needs assessment committee to gather data, brainstorm ideas, and prioritize decisions; this group should be involved at every point in the needs assessment as a method of accountability. Suggestions for who should be on this committee are listed under Considerations for the Needs Assessment Committee (NAC) below.

Committing to a needs assessment to maintain your relevance within a community is, for many, a tough pill to swallow. It may mean having to reformat programs, products, or locations in order to be the most effective with the resources you have. But, if community change and social impact are your goals, you most certainly need to make sure you are needed.

Considerations for the Needs Assessment Committee (NAC)

- Members should include a cross section of stakeholders, clientele, and partners

- Avoid very strong personalities or excessive talkers; set boundaries and rules that prohibit someone from dominating the discussion (ensure these rules are clearly articulated at the first meeting)

- Consider prior history if there are groups or committees that have been included before and should remain connected

- Make deliberate choices; don’t pick a random group of people just because they have the time to serve

- One member should have data analysis skills if the needs assessment leader does not

- Members should include power brokers and coalition makers

- Members should include stakeholders with vested interests in the outcomes of the study; create structures of accountability to ensure an objective account of community needs

- Ensure that the NAC has access to decision-makers (leaders) and can influence decisions

- Ideally have fewer than 10 people in the NAC, but if larger, divide the NAC into subcommittees

Source: Oregon State University’s Needs Assessment Primer and Strengthening Nonprofits’ Conducting a Community Assessment

Sources

From “Community Needs Assessments,” Learning to Give, URL: https://www.learningtogive.org/resources/community-needs-assessments

From “Comprehensive Needs Assessment,” US Office of Migrant Education, URL: https://www2.ed.gov/admins/lead/account/compneedsassessment.pdf

From “Conducting a Community Assessment,” Strengthening Nonprofits: A Capacity Builder’s Resource Library, URL: http://strengtheningnonprofits.org/resources/guidebooks/Community_Assessment.pdf